Introduction:

Data source: https://www.thaihometown.com/

Collect data: recent condo rental information in popular areas of Thailand (Bangkok, Chiang Mai, Phuket)

Use tools/third-party libraries: Python, requests, BeautifulSoup, pandas, re

Main Program:

import requests

from bs4 import BeautifulSoup

import time

import random

from user_agent import user_agent_pool

import re

import pandas as pd

#use a random user_agent to aviod detect

headers = {

'User-Agent': random.choice(user_agent_pool),

'Referer': 'https://www.thaihometown.com/',

}

#stored info: condo_name, price, number_of_bedroom, date, area, city

info = {

'room_size': [],

'price': [],

'number_of_bedroom': [],

'date': [],

'city': [],

'area': []

}

cnt_dict = {}

session = requests.session()

#request the first pqge

def parse(url):

try:

html = session.get(url, headers=headers, timeout=5)

if html.status_code == 200:

extract(html.text)

else:

print('ERROR{}'.format(html.status_code))

except Exception as e:

print(e)

#get the detail url of each page

def extract(data):

try:

deep_url = []

soup = BeautifulSoup(data, 'lxml')

url_set = soup.select('[id*=mclick]')

for i in url_set:

if "ให้เช่า" in i.select_one('.pirced').text:

date_info = i.select_one('.dateupdate5').text.strip()

month, year = get_date(date_info)

cnt_data(month, year)

if cnt_data(month, year):

detail_url = i.select_one('a')['href']

deep_url.append(detail_url)

request_deep(deep_url)

except Exception as e:

print(e)

#request the detail page

def request_deep(urls):

try:

if len(urls) != 0:

for url in urls:

deep_html = session.get(url, headers=headers, timeout=5)

if deep_html.status_code == 200:

#set the sleep time to control the speed of scraping each detail page

time.sleep(random.uniform(0.5, 2))

collect_info(deep_html.text)

else:

print('ERROR{}'.format(deep_html.status_code))

except Exception as e:

print(e)

#get the info needed

def collect_info(html_data):

try:

deep_soup = BeautifulSoup(html_data, 'lxml')

# condo_name=deep_soup.select_one('.headtitle2br').find_next_sibling(string=True).strip()

info_set = deep_soup.select('.namedesw12 tr')

date = deep_soup.select_one('.namedesw9 .datedetail').text.strip()

#clean the data to save only the info we need

date = re.findall(r'\d+ .+ \d+', date)[0]

for i in info_set:

if 'พื้นที่ห้อง' in i.text or 'ขนาดห้อง' in i.text:

room_size = i.select_one(' .table_set3 .linkcity').text.strip()

if 'ราคา :' in i.text:

price = i.select_one('.table_set3PriceN .linkprice').text.strip()

#get only the number we need

price = ''.join(re.findall(r'\d+', price))

if 'จำนวนห้อง' in i.text:

room_type = i.select_one('.concept_type').text.strip()

#studio considered as 1 bedroom

number_of_bedroom = 1 if 'ห้องสตูดิโอ' in room_type else int(re.findall(r'\d+', room_type)[0])

if 'จังหวัด :' in i.text:

city = i.select_one('.linkcity').text.strip()

if 'อำเภอ :' in i.text or 'เขตที่ตั้ง :' in i.text:

area = i.select_one('.linkcity').text.strip()

for key, value in zip(info.keys(), [room_size, price, number_of_bedroom, date, city, area]):

info[key].append(value)

except Exception as e:

print(e)

#save the info we get into a CSV file

def save_info(info):

try:

df = pd.DataFrame(info)

df.to_csv('condo_info_bkk2.csv')

print('Save Information Done')

except Exception as e:

print(e)

#for these 2 functions are to limit the size of the dataset

#limit the data size:

def get_date(date_info):

date = re.findall(r'\d.+', date_info)[0]

month = date.split(' ')[1]

year = date.split(' ')[2]

return month, year

def cnt_data(month, year):

if year not in cnt_dict:

cnt_dict[year] = {}

if month not in cnt_dict[year]:

cnt_dict[year][month] = 0

#only get maximum 100 rows for each months

if cnt_dict[year][month] >= 100:

return False

cnt_dict[year][month] += 1

return True

if __name__ == '__main__':

for i in range(32, 400):

#Phuket

# url = f'https://www.thaihometown.com/condo/{format(i)}/tag/%E0%B9%83%E0%B8%AB%E0%B9%89%E0%B9%80%E0%B8%8A%E0%B9%88%E0%B8%B2%E0%B8%84%E0%B8%AD%E0%B8%99%E0%B9%82%E0%B8%94_%E0%B8%A0%E0%B8%B9%E0%B9%80%E0%B8%81%E0%B9%87%E0%B8%95'

#Chiangmai

# url = f'https://www.thaihometown.com/condo/{format(i)}/tag/%E0%B8%84%E0%B8%AD%E0%B8%99%E0%B9%82%E0%B8%94%E0%B9%83%E0%B8%AB%E0%B9%89%E0%B9%80%E0%B8%8A%E0%B9%88%E0%B8%B2_%E0%B9%80%E0%B8%8A%E0%B8%B5%E0%B8%A2%E0%B8%87%E0%B9%83%E0%B8%AB%E0%B8%A1%E0%B9%88'

#Bangkok

url = f'https://www.thaihometown.com/condo/{format(i)}/tag/%E0%B8%84%E0%B8%AD%E0%B8%99%E0%B9%82%E0%B8%94%E0%B9%83%E0%B8%AB%E0%B9%89%E0%B9%80%E0%B8%8A%E0%B9%88%E0%B8%B2_%E0%B8%81%E0%B8%A3%E0%B8%B8%E0%B8%87%E0%B9%80%E0%B8%97%E0%B8%9E%E0%B8%A1%E0%B8%AB%E0%B8%B2%E0%B8%99%E0%B8%84%E0%B8%A3'

parse(url)

print('Collect Information Done')

#set the sleep time to control the speed of scraping each page

time.sleep(random.uniform(0.5, 2))

save_info(info)

Data clean

import pandas as pd

import re

month_transfer = {

'มกราคม': 1,

'กุมภาพันธ์': 2,

'มีนาคม': 3,

'เมษายน': 4,

'พฤษภาคม': 5,

'มิถุนายน': 6,

'กรกฎาคม': 7,

'กรกฏาคม': 7,

'สิงหาคม': 8,

'กันยายน': 9,

'ตุลาคม': 10,

'พฤศจิกายน': 11,

'ธันวาคม': 12

}

df = pd.read_csv('condo_info_bkk2.csv', index_col=0)

#clean the column "date":

pattern = r'┃.*'

def date_clean(data):

return re.sub(pattern, '', data)

df['date'] = df['date'].apply(date_clean)

df[['day', 'month', 'year']] = df['date'].str.split(expand=True)

df['month'] = df['month'].replace(month_transfer)

df['year'] = df['year'].astype(int) - 543

df.drop(columns=['day'], inplace=True)

#clean the column"room_size"

df['room_size'] = df['room_size'].str.replace('\s\D+', '', regex=True)

#clean duplicate and none:

df.drop_duplicates(inplace=True)

df.dropna(inplace=True)

#save changes

df.to_csv('cleaned_data_bkk2.csv', index=False)

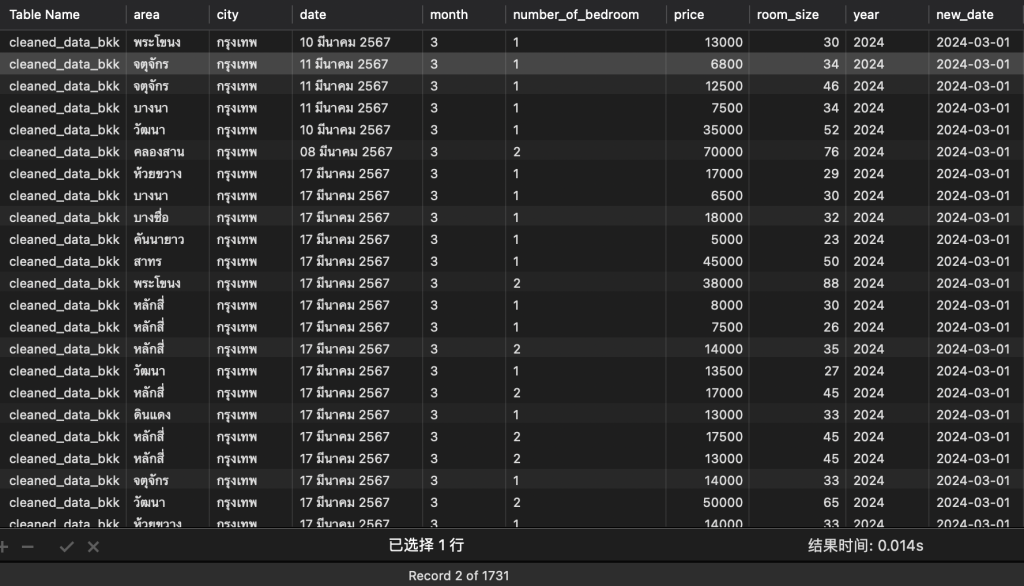

Output: